You have a highly sensitive BigQuery workload that contains personally identifiable information (PII) that you want to ensure is not accessible from the internet. To prevent data exfiltration, only requests from authorized IP addresses are allowed to query your BigQuery tables.

What should you do?

A customer terminates an engineer and needs to make sure the engineer's Google account is automatically deprovisioned.

What should the customer do?

Your company conducts clinical trials and needs to analyze the results of a recent study that are stored in BigQuery. The interval when the medicine was taken contains start and stop dates. The interval data is critical to the analysis, but specific dates may identify a particular batch and introduce bias. You need to obfuscate the start and end dates for each row and preserve the interval data.

What should you do?
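The requirement in the scenario above (hide the real dates, keep the interval) can be illustrated with a minimal Python sketch. It shifts both dates of a row by the same random offset, so the length of the interval is unchanged. The function name `shift_interval` and the `max_shift_days` parameter are hypothetical names chosen for illustration; this is a sketch of the idea, not a statement of how any particular Google Cloud service implements it.

```python
import random
from datetime import date, timedelta
from typing import Optional, Tuple


def shift_interval(
    start: date,
    stop: date,
    max_shift_days: int = 100,  # hypothetical bound on the shift
    rng: Optional[random.Random] = None,
) -> Tuple[date, date]:
    """Shift both dates by one shared random offset, preserving stop - start."""
    rng = rng or random.Random()
    # One offset per row: the same value is applied to both dates.
    offset = timedelta(days=rng.randint(-max_shift_days, max_shift_days))
    return start + offset, stop + offset


if __name__ == "__main__":
    original = (date(2023, 1, 10), date(2023, 1, 24))
    shifted = shift_interval(*original)
    # The interval length is the same before and after the shift.
    print(original[1] - original[0], shifted[1] - shifted[0])
```

Because the offset is drawn once per row and applied to both columns, the difference between the two dates survives while the absolute dates no longer point to a specific batch.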

Your organization is migrating its primary web application from on-premises to Google Kubernetes Engine (GKE). You must advise the development team on how to grant their applications access to Google Cloud services from within GKE according to security recommended practices. What should you do?

You have created an OS image that is hardened per your organization’s security standards and is being stored in a project managed by the security team. As a Google Cloud administrator, you need to make sure all VMs in your Google Cloud organization can only use that specific OS image while minimizing operational overhead. What should you do? (Choose two.)

You manage your organization’s Security Operations Center (SOC). You currently monitor and detect network traffic anomalies in your VPCs based on network logs. However, you want to explore your environment using network payloads and headers. Which Google Cloud product should you use?

Your organization develops software involved in many open source projects and is concerned about software supply chain threats. You need to deliver provenance for the build to demonstrate the software is untampered.

What should you do?

You are the security admin of your company. You have 3,000 objects in your Cloud Storage bucket. You do not want to manage access to each object individually. You also do not want the uploader of an object to always have full control of the object. However, you want to use Cloud Audit Logs to manage access to your bucket.

What should you do?

Your company has multiple teams needing access to specific datasets across various Google Cloud data services for different projects. You need to ensure that team members can only access the data relevant to their projects and prevent unauthorized access to sensitive information within BigQuery, Cloud Storage, and Cloud SQL. What should you do?

You plan to use a Google Cloud Armor policy to prevent common attacks such as cross-site scripting (XSS) and SQL injection (SQLi) from reaching your web application's backend. What are two requirements for using Google Cloud Armor security policies? (Choose two.)

Which Google Cloud service should you use to enforce access control policies for applications and resources?

Your organization processes sensitive health information. You want to ensure that data is encrypted while in use by the virtual machines (VMs). You must create a policy that is enforced across the entire organization.

What should you do?

You are in charge of creating a new Google Cloud organization for your company. Which two actions should you take when creating the super administrator accounts? (Choose two.)

Your company has deployed an artificial intelligence model in a central project. As this model has a lot of sensitive intellectual property and must be kept strictly isolated from the internet, you must expose the model endpoint only to a defined list of projects in your organization. What should you do?

A patch for a vulnerability has been released, and a DevOps team needs to update their running containers in Google Kubernetes Engine (GKE).

How should the DevOps team accomplish this?

The CISO of your highly regulated organization has mandated that all AI applications running in production must be based on Google first-party models. Your security team has now implemented the Model Garden's organization policy meant to centrally control access and user actions on these approved models at the production folder level. However, it appears that someone has overwritten the policy. This has allowed developers to access third-party models on a particular production project. You need to resolve the issue with a solution that prevents a repeat occurrence. What should you do?

You are creating an internal App Engine application that needs to access a user’s Google Drive on the user’s behalf. Your company does not want to rely on the current user’s credentials. It also wants to follow Google-recommended practices.

What should you do?

An organization is moving applications to Google Cloud while maintaining a few mission-critical applications on-premises. The organization must transfer the data at a bandwidth of at least 50 Gbps. What should they use to ensure secure continued connectivity between sites?

You are the security admin of your company. Your development team creates multiple GCP projects under the "implementation" folder for several dev, staging, and production workloads. You want to prevent data exfiltration by malicious insiders or compromised code by setting up a security perimeter. However, you do not want to restrict communication between the projects.

What should you do?

Your DevOps team uses Packer to build Compute Engine images by using this process:

1. Create an ephemeral Compute Engine VM.

2. Copy a binary from a Cloud Storage bucket to the VM's file system.

3. Update the VM's package manager.

4. Install external packages from the internet onto the VM.

Your security team just enabled the organization policy constraints/compute.vmExternalIpAccess to restrict the use of public IP addresses on VMs. In response, your DevOps team updated their scripts to remove public IP addresses on the Compute Engine VMs; however, the build pipeline is failing due to connectivity issues.

What should you do?

Choose 2 answers

Your organization enforces a custom organization policy that disables the use of Compute Engine VM instances with external IP addresses. However, a regulated business unit requires an exception to temporarily use external IPs for a third-party audit process. The regulated business workload must comply with least privilege principles and minimize policy drift. You need to ensure secure policy management and proper handling. What should you do?

Your organization acquired a new workload. The Web and Application (App) servers will be running on Compute Engine in a newly created custom VPC. You are responsible for configuring a secure network communication solution that meets the following requirements:

Only allows communication between the Web and App tiers.

Enforces consistent network security when autoscaling the Web and App tiers.

Prevents Compute Engine Instance Admins from altering network traffic.

What should you do?

You are running a workload which processes very sensitive data that is intended to be used downstream by data scientists to train further models. The security team has very strict requirements around data handling and encryption, approved workloads, as well as separation of duties for the users of the output of the workload. You need to build the environment to support these requirements. What should you do?

Your organization needs to restrict the types of Google Cloud services that can be deployed within specific folders to enforce compliance requirements. You must apply these restrictions only to the designated folders without affecting other parts of the resource hierarchy. You want to use the most efficient and simple method. What should you do?

You need to enforce a security policy in your Google Cloud organization that prevents users from exposing objects in their buckets externally. There are currently no buckets in your organization. Which solution should you implement proactively to achieve this goal with the least operational overhead?

You are deploying a web application hosted on Compute Engine. A business requirement mandates that application logs are preserved for 12 years and data is kept within European boundaries. You want to implement a storage solution that minimizes overhead and is cost-effective. What should you do?

Your company is developing a new application for your organization. The application consists of two Cloud Run services, service A and service B. Service A provides a web-based user front-end. Service B provides back-end services that are called by service A. You need to set up identity and access management for the application. Your solution should follow the principle of least privilege. What should you do?

Your organization wants to be continuously evaluated against CIS Google Cloud Computing Foundations Benchmark v1.3.0 (CIS Google Cloud Foundation 1.3). Some of the controls are irrelevant to your organization and must be disregarded in evaluation. You need to create an automated system or process to ensure that only the relevant controls are evaluated.

What should you do?

You want data on Compute Engine disks to be encrypted at rest with keys managed by Cloud Key Management Service (KMS). Cloud Identity and Access Management (IAM) permissions to these keys must be managed in a grouped way because the permissions should be the same for all keys.

What should you do?

Your company requires the security and network engineering teams to identify all network anomalies within and across VPCs, internal traffic from VMs to VMs, traffic between end locations on the internet and VMs, and traffic between VMs to Google Cloud services in production. Which method should you use?

Your global defense company is migrating top-secret classified data to BigQuery and Cloud Storage. National security regulations demand that master encryption key material never leaves the accredited on-premises cryptographic hardware. You must retain the unilateral ability to revoke data access, independent of any cloud provider. What should you do?

Your organization is using Google Cloud to develop and host its applications. Following Google-recommended practices, the team has created dedicated projects for development and production. Your development team is located in Canada and Germany. The operations team works exclusively from Germany to adhere to local laws. You need to ensure that admin access to Google Cloud APIs is restricted to these countries and environments. What should you do?

Your organization is using Vertex AI Workbench Instances. You must ensure that newly deployed instances are automatically kept up-to-date and that users cannot accidentally alter settings in the operating system. What should you do?

A customer needs an alternative to storing their plain text secrets in their source-code management (SCM) system.

How should the customer achieve this using Google Cloud Platform?

Your team wants to centrally manage GCP IAM permissions from their on-premises Active Directory Service. Your team wants to manage permissions by AD group membership.

What should your team do to meet these requirements?

Your organization is rolling out a new continuous integration and delivery (CI/CD) process to deploy infrastructure and applications in Google Cloud. Many teams will use their own instances of the CI/CD workflow. It will run on Google Kubernetes Engine (GKE). The CI/CD pipelines must be designed to securely access Google Cloud APIs.

What should you do?

Your organization is using GitHub Actions as a continuous integration and delivery (CI/CD) platform. You must enable access to Google Cloud resources from the CI/CD pipelines in the most secure way.

What should you do?

An organization is starting to move its infrastructure from its on-premises environment to Google Cloud Platform (GCP). The first step the organization wants to take is to migrate its ongoing data backup and disaster recovery solutions to GCP. The organization's on-premises production environment is going to be the next phase for migration to GCP. Stable networking connectivity between the on-premises environment and GCP is also being implemented.

Which GCP solution should the organization use?

Your company has deployed an artificial intelligence model in a central project. This model has a lot of sensitive intellectual property and must be kept strictly isolated from the internet. You must expose the model endpoint only to a defined list of projects in your organization. What should you do?

You are working with a client who plans to migrate their data to Google Cloud. You are responsible for recommending an encryption service to manage their encrypted keys. You have the following requirements:

The master key must be rotated at least once every 45 days.

The solution that stores the master key must be FIPS 140-2 Level 3 validated.

The master key must be stored in multiple regions within the US for redundancy.

Which solution meets these requirements?

Your organization recently activated the Security Command Center (SCC) standard tier. There are a few Cloud Storage buckets that were accidentally made accessible to the public. You need to investigate the impact of the incident and remediate it.

What should you do?

Your organization wants to protect its supply chain from attacks. You need to automatically scan your deployment pipeline for vulnerabilities and ensure only scanned and verified containers can be executed in your production environment. You want to minimize management overhead. What should you do?

You run applications on Cloud Run. You already enabled container analysis for vulnerability scanning. However, you are concerned about the lack of control on the applications that are deployed. You must ensure that only trusted container images are deployed on Cloud Run.

What should you do?

Choose 2 answers

Your organization wants full control of the keys used to encrypt data at rest in their Google Cloud environments. Keys must be generated and stored outside of Google and integrate with many Google Services including BigQuery.

What should you do?

You manage one of your organization's Google Cloud projects (Project A). A VPC Service Controls (VPC SC) perimeter is blocking API access requests to this project, including Pub/Sub. A resource running under a service account in another project (Project B) needs to collect messages from a Pub/Sub topic in your project. Project B is not included in a VPC SC perimeter. You need to provide access from Project B to the Pub/Sub topic in Project A using the principle of least privilege.

What should you do?

You discovered that sensitive personally identifiable information (PII) is being ingested to your Google Cloud environment in the daily ETL process from an on-premises environment to your BigQuery datasets. You need to redact this data to obfuscate the PII, but need to re-identify it for data analytics purposes. Which components should you use in your solution? (Choose two.)

You are setting up a new Cloud Storage bucket in your environment that is encrypted with a customer-managed encryption key (CMEK). The CMEK is stored in Cloud Key Management Service (KMS) in project "prj-a", and the Cloud Storage bucket will use project "prj-b". The key is backed by a Cloud Hardware Security Module (HSM) and resides in the region europe-west3. Your storage bucket will be located in the region europe-west1. When you create the bucket, you cannot access the key, and you need to troubleshoot why.

What has caused the access issue?

A customer wants to move their sensitive workloads to a Compute Engine-based cluster using Managed Instance Groups (MIGs). The jobs are bursty and must be completed quickly. They have a requirement to be able to manage and rotate the encryption keys.

Which boot disk encryption solution should you use on the cluster to meet this customer’s requirements?

Your organization operates a hybrid cloud environment and has recently deployed a private Artifact Registry repository in Google Cloud. On-premises developers cannot resolve the Artifact Registry hostname and therefore cannot push or pull artifacts. You've verified the following:

Connectivity to Google Cloud is established by Cloud VPN or Cloud Interconnect.

No custom DNS configurations exist on-premises.

There is no route to the internet from the on-premises network.

You need to identify the cause and enable the developers to push and pull artifacts. What is likely causing the issue and what should you do to fix the issue?

In an effort for your company messaging app to comply with FIPS 140-2, a decision was made to use GCP compute and network services. The messaging app architecture includes a Managed Instance Group (MIG) that controls a cluster of Compute Engine instances. The instances use Local SSDs for data caching and UDP for instance-to-instance communications. The app development team is willing to make any changes necessary to comply with the standard.

Which options should you recommend to meet the requirements?

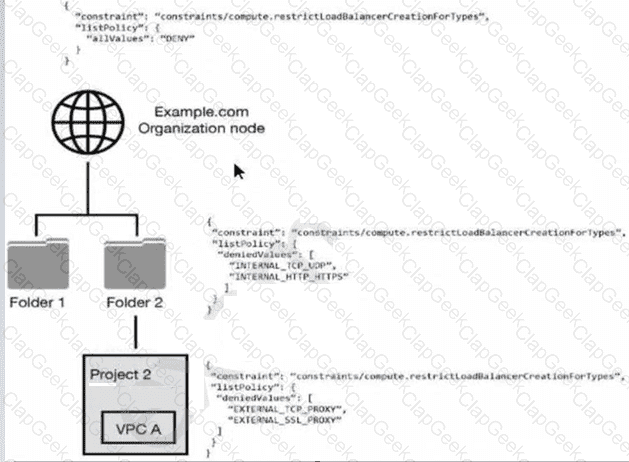

You have the following resource hierarchy. There is an organization policy at each node in the hierarchy as shown. Which load balancer types are denied in VPC A?

Your organization has implemented synchronization and SAML federation between Cloud Identity and Microsoft Active Directory. You want to reduce the risk of Google Cloud user accounts being compromised. What should you do?

You are responsible for protecting highly sensitive data in BigQuery. Your operations teams need access to this data, but given privacy regulations, you want to ensure that they cannot read the sensitive fields such as email addresses and first names. These specific sensitive fields should only be available on a need-to-know basis to the HR team. What should you do?

A customer’s company has multiple business units. Each business unit operates independently, and each has their own engineering group. Your team wants visibility into all projects created within the company and wants to organize their Google Cloud Platform (GCP) projects based on different business units. Each business unit also requires separate sets of IAM permissions.

Which strategy should you use to meet these needs?

A customer needs to prevent attackers from hijacking their domain/IP and redirecting users to a malicious site through a man-in-the-middle attack.

Which solution should this customer use?

You need to use Cloud External Key Manager to create an encryption key to encrypt specific BigQuery data at rest in Google Cloud. Which steps should you do first?

A company is using Google Kubernetes Engine (GKE) with container images of a mission-critical application. The company wants to scan the images for known security issues and securely share the report with the security team without exposing them outside Google Cloud.

What should you do?

Your organization's customers must scan and upload the contract and their driver's license into a web portal in Cloud Storage. You must remove all personally identifiable information (PII) from files that are older than 12 months. Also, you must archive the anonymized files for retention purposes.

What should you do?

Your Google Cloud environment has one organization node, one folder named "Apps," and several projects within that folder. The organization node enforces the constraints/iam.allowedPolicyMemberDomains organization policy, which allows members from the terramearth.com organization. The "Apps" folder enforces the constraints/iam.allowedPolicyMemberDomains organization policy, which allows members from the flowlogistic.com organization. It also has the inheritFromParent: false property.

You attempt to grant access to a project in the Apps folder to the user testuser@terramearth.com.

What is the result of your action and why?
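The hierarchy evaluation in this scenario can be reasoned about with a small toy model. The sketch below is not the Organization Policy API; the class `PolicyNode` and the helper functions are hypothetical, and it uses domain names as the scenario does (the real constraint identifies organizations by customer ID). It assumes the documented behavior of list constraints: a node without its own policy inherits from its parent, a policy with `inheritFromParent: true` merges with the parent's values, and `inheritFromParent: false` replaces them.

```python
from typing import Optional, Set


class PolicyNode:
    """Toy resource node (hypothetical, for illustration only)."""

    def __init__(
        self,
        name: str,
        allowed_domains: Optional[Set[str]] = None,  # None = no policy set here
        inherit_from_parent: bool = True,
        parent: Optional["PolicyNode"] = None,
    ) -> None:
        self.name = name
        self.allowed_domains = allowed_domains
        self.inherit_from_parent = inherit_from_parent
        self.parent = parent


def effective_allowed_domains(node: PolicyNode) -> Set[str]:
    """Compute the allowed domains that apply at this node."""
    parent_allowed = (
        effective_allowed_domains(node.parent) if node.parent else set()
    )
    if node.allowed_domains is None:
        return parent_allowed  # no local policy: inherit as-is
    if node.inherit_from_parent:
        return parent_allowed | node.allowed_domains  # merge with parent
    return set(node.allowed_domains)  # replace the parent's values


def can_grant(node: PolicyNode, member_email: str) -> bool:
    """Check whether a member's domain is allowed at this node."""
    domain = member_email.split("@", 1)[1]
    return domain in effective_allowed_domains(node)


org = PolicyNode("organization", {"terramearth.com"})
apps = PolicyNode("Apps", {"flowlogistic.com"}, inherit_from_parent=False, parent=org)
project = PolicyNode("project-in-apps", parent=apps)

if __name__ == "__main__":
    print(can_grant(project, "testuser@terramearth.com"))
```

Under these assumptions, the project inherits the folder's effective policy, which no longer includes the values set at the organization node.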

Your company is deploying a new application on GKE. The application handles sensitive customer data and is subject to strict data residency requirements. You need to ensure that the data is stored only within the europe-west4 region. What should you do?

Your organization deploys a large number of containerized applications on Google Kubernetes Engine (GKE). Node updates are currently applied manually. Audit findings show that a critical patch has not been installed due to a missed notification. You need to design a more reliable, cloud-first, and scalable process for node updates. What should you do?

You are migrating an on-premises data warehouse to BigQuery, Cloud SQL, and Cloud Storage. You need to configure security services in the data warehouse. Your company compliance policies mandate that the data warehouse must:

• Protect data at rest with full lifecycle management on cryptographic keys

• Implement a separate key management provider from data management

• Provide visibility into all encryption key requests

What services should be included in the data warehouse implementation?

Choose 2 answers

Your organization is deploying a serverless web application on Cloud Run that must be publicly accessible over HTTPS. To meet security requirements, you need to terminate TLS at the edge, apply threat mitigation, and prepare for geo-based access restrictions. What should you do?

Your company's users access data in a BigQuery table. You want to ensure they can only access the data during working hours.

What should you do?

You need to set up two network segments: one with an untrusted subnet and the other with a trusted subnet. You want to configure a virtual appliance such as a next-generation firewall (NGFW) to inspect all traffic between the two network segments. How should you design the network to inspect the traffic?

A customer is running an analytics workload on Google Cloud Platform (GCP) where Compute Engine instances are accessing data stored on Cloud Storage. Your team wants to make sure that this workload will not be able to access, or be accessed from, the internet.

Which two strategies should your team use to meet these requirements? (Choose two.)

Your company has recently enabled Security Command Center at the organization level. You need to implement runtime threat detection for applications running in containers within projects residing in the production folder. Specifically, you need to be notified if additional libraries are loaded or malicious scripts are executed within these running containers. You need to configure Security Command Center to meet this requirement while ensuring findings are visible within Security Command Center. What should you do?

A customer’s internal security team must manage its own encryption keys for encrypting data on Cloud Storage and decides to use customer-supplied encryption keys (CSEK).

How should the team complete this task?

You are consulting with a client that requires end-to-end encryption of application data (including data in transit, data in use, and data at rest) within Google Cloud. Which options should you utilize to accomplish this? (Choose two.)

You are the project owner for a regulated workload that runs in a project you own and manage as an Identity and Access Management (IAM) admin. For an upcoming audit, you need to provide access reviews evidence. Which tool should you use?

Your company operates an application instance group that is currently deployed behind a Google Cloud load balancer in us-central-1 and is configured to use the Standard Tier network. The infrastructure team wants to expand to a second Google Cloud region, us-east-2. You need to set up a single external IP address to distribute new requests to the instance groups in both regions.

What should you do?

A company is backing up application logs to a Cloud Storage bucket shared with both analysts and the administrator. Analysts should only have access to logs that do not contain any personally identifiable information (PII). Log files containing PII should be stored in another bucket that is only accessible by the administrator.

What should you do?

An organization is starting to move its infrastructure from its on-premises environment to Google Cloud Platform (GCP). The first step the organization wants to take is to migrate its current data backup and disaster recovery solutions to GCP for later analysis. The organization’s production environment will remain on-premises for an indefinite time. The organization wants a scalable and cost-efficient solution.

Which GCP solution should the organization use?

Your company requires the security and network engineering teams to identify all network anomalies and be able to capture payloads within VPCs. Which method should you use?

Your organization has established a highly sensitive project within a VPC Service Controls perimeter. You need to ensure that only users meeting specific contextual requirements such as having a company-managed device, a specific location, and a valid user identity can access resources within this perimeter. You want to evaluate the impact of this change without blocking legitimate access. What should you do?

Your team wants to limit users with administrative privileges at the organization level.

Which two roles should your team restrict? (Choose two.)

You are working with a client that is concerned about control of their encryption keys for sensitive data. The client does not want to store encryption keys at rest in the same cloud service provider (CSP) as the data that the keys are encrypting. Which Google Cloud encryption solutions should you recommend to this client? (Choose two.)

You want to use the gcloud command-line tool to authenticate using a third-party single sign-on (SSO) SAML identity provider. Which options are necessary to ensure that authentication is supported by the third-party identity provider (IdP)? (Choose two.)

Your financial services company needs to process customer personally identifiable information (PII) for analytics while adhering to strict privacy regulations. You must transform this data to protect individual privacy to ensure that the data retains its original format and consistency for analytical integrity. Your solution must avoid full irreversible deletion. What should you do?

You are creating a new infrastructure CI/CD pipeline to deploy hundreds of ephemeral projects in your Google Cloud organization to enable your users to interact with Google Cloud. You want to restrict the use of the default networks in your organization while following Google-recommended best practices. What should you do?

Your company runs a website that will store PII on Google Cloud Platform. To comply with data privacy regulations, this data can only be stored for a specific amount of time and must be fully deleted after this specific period. Data that has not yet reached the time period should not be deleted. You want to automate the process of complying with this regulation.

What should you do?

Your privacy team uses crypto-shredding (deleting encryption keys) as a strategy to delete personally identifiable information (PII). You need to implement this practice on Google Cloud while still utilizing the majority of the platform’s services and minimizing operational overhead. What should you do?

You are a security administrator at your company. Per Google-recommended best practices, you implemented the domain restricted sharing organization policy to allow only required domains to access your projects. An engineering team is now reporting that users at an external partner outside your organization domain cannot be granted access to the resources in a project. How should you make an exception for your partner's domain while following the stated best practices?

A large financial institution is moving its Big Data analytics to Google Cloud Platform. They want to have maximum control over the encryption process of data stored at rest in BigQuery.

What technique should the institution use?

Which two security characteristics are related to the use of VPC peering to connect two VPC networks? (Choose two.)

You are working with a network engineer at your company who is extending a large BigQuery-based data analytics application. Currently, all of the data for that application is ingested from on-premises applications over a Dedicated Interconnect connection with a 20 Gbps capacity. You need to onboard a data source on Microsoft Azure that requires a daily ingestion of approximately 250 TB of data. You need to ensure that the data gets transferred securely and efficiently. What should you do?
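The numbers in this scenario are worth checking before weighing any option. The sketch below converts a daily volume into the sustained bandwidth it needs. It assumes decimal units (1 TB = 10^12 bytes), a perfectly even transfer over 24 hours, and no protocol overhead; the function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def required_gbps(terabytes_per_day: float) -> float:
    """Sustained bandwidth (Gbps) needed to move a daily volume, decimal units."""
    bits_per_day = terabytes_per_day * 1e12 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e9


def max_terabytes_per_day(gbps: float) -> float:
    """Daily volume (TB) a fully utilized link of the given speed can carry."""
    bytes_per_day = gbps * 1e9 / 8 * SECONDS_PER_DAY
    return bytes_per_day / 1e12


if __name__ == "__main__":
    print(f"250 TB/day needs about {required_gbps(250):.1f} Gbps")
    print(f"20 Gbps carries about {max_terabytes_per_day(20):.0f} TB/day")
```

Under these assumptions, 250 TB per day needs roughly 23.1 Gbps sustained, while a fully utilized 20 Gbps link carries about 216 TB per day, and that link is already used for the existing on-premises ingestion.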

You are asked to recommend a solution to store and retrieve sensitive configuration data from an application that runs on Compute Engine. Which option should you recommend?

Your company has deployed an application on Compute Engine. The application is accessible by clients on port 587. You need to balance the load between the different instances running the application. The connection should be secured using TLS, and terminated by the Load Balancer.

What type of Load Balancing should you use?

A database administrator notices malicious activities within their Cloud SQL instance. The database administrator wants to monitor the API calls that read the configuration or metadata of resources. Which logs should the database administrator review?